The automotive industry is advancing rapidly. Cars with autopilot software are increasingly allowed on the roads, provided that the roads meet certain standards. That’s why engineers are working intensively on AI systems that monitor roads and warn drivers of danger. But what about systems that monitor drivers’ behavior and are available to drivers of traditional cars as well?

The risk of car accidents increases when drivers are drowsy or distracted. Researchers have found that severe sleep deprivation decreases attention and concentration to the same extent as alcohol intoxication. The figures are staggering: if a person has slept for 6 hours instead of the recommended 8 to 9 hours, the risk of an accident increases 1.3 times; with 5 to 6 hours of sleep, the risk is almost double; and with 4 to 5 hours of sleep, the risk is 4.3 times higher. If a driver has slept less than 4 hours the night before a trip, the probability of an accident increases 11.5 times.

In 2005, American researchers conducted a study supported by the National Sleep Foundation showing that approximately 168 million adults (60% of all US motorists) have felt sleepy behind the wheel and a third of them have fallen asleep behind the wheel. With the help of a driver behavior AI algorithm, we can encourage more cautious driving and help prevent accidents. On top of that, businesses that have adopted fleet management solutions can monitor the safety of their employees. The model not only helps keep drivers alert to distractions and sleepiness but also helps avoid financial losses from car repairs.

In this article, Andriian Rybak, an expert in data science development services at Lemberg Solutions, describes how to develop a driver behavior system based on Bayesian classification.

Model overview and calculations

The idea for the prototype is to replace a regular rearview mirror with a smart mirror that monitors the driver’s behavior using video surveillance and sends audio alerts to attract the driver’s attention. With access to the car’s audio output, the mirror will communicate with the car’s multimedia system. These initial prototype capabilities will be developed further in the course of research and implementation. Note that data must be processed in real time and the monitoring model should run on a low-power microcomputer.

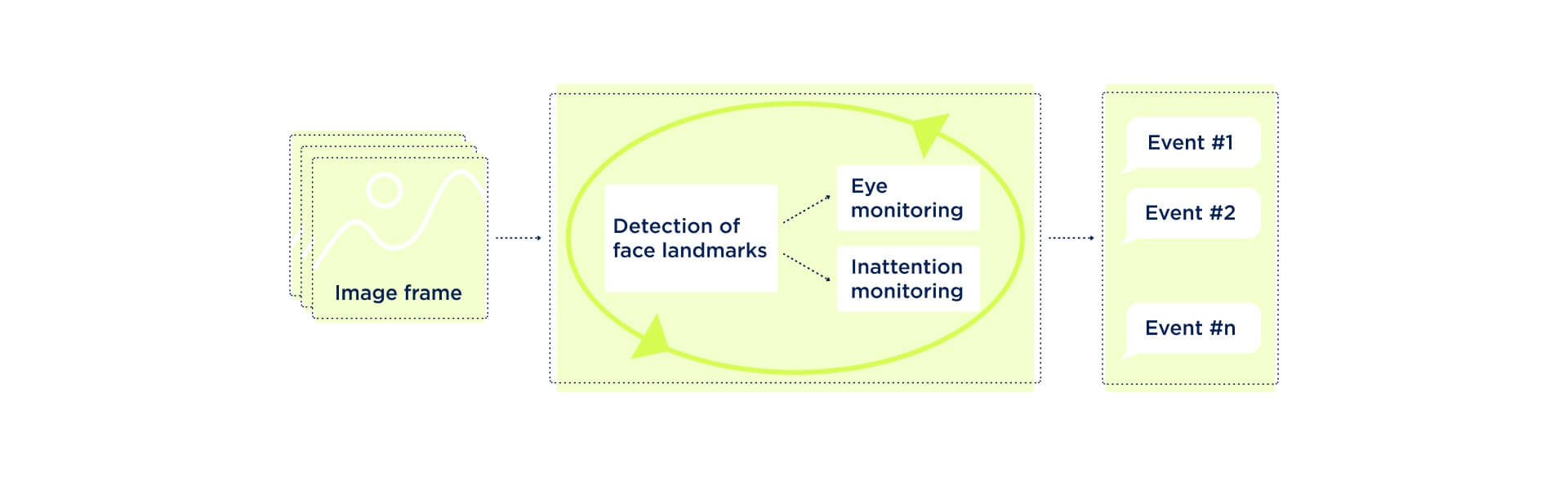

Since the monitoring model will run on a low-power microcomputer, it should use simple and fast data processing techniques. The model consists of eye landmark recognition, eye closing and blinking monitoring, and inattention monitoring.

Figure 1. Driver monitoring system architecture

The system takes an image frame from the video stream in real time and performs facial landmark detection. For this purpose, we applied the modified Histogram of Oriented Gradients method in combination with the Linear SVM method implemented in the Dlib library. Dlib is a modern C++ toolkit with an open source license that contains ML algorithms and tools for creating complex software to solve real-world problems.

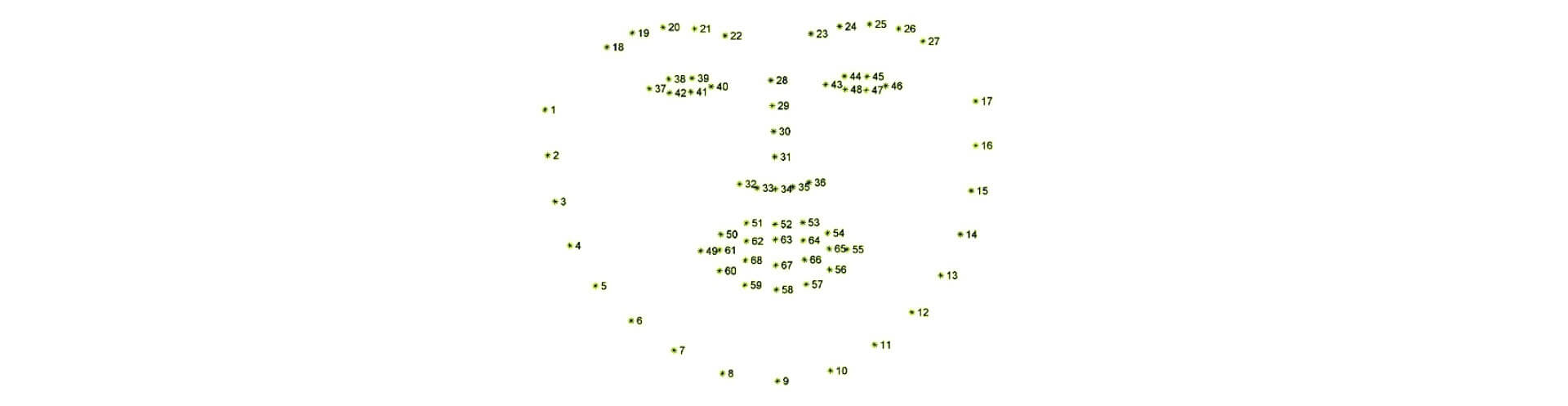

Using Dlib, we developed a face landmark detector returning 68 (x, y) coordinates that reflect specific facial structures. The detector model was trained on the iBUG 300-W dataset and can be retrained if necessary.

Face landmark detector data (fig. 2):

- mouth – [49, 68]

- right eyebrow – [18, 22]

- left eyebrow – [23, 27]

- right eye – [37, 42]

- left eye – [43, 48]

- nose – [28, 36]

- jaw – [1, 17]
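The ranges above are 1-based and inclusive, while most code works with 0-based sequences. The following is a minimal sketch of how the regions could be looked up in Python; the names `LANDMARK_RANGES` and `region_points` are hypothetical helpers introduced here for illustration and are not part of Dlib.

```python
# 1-based inclusive landmark ranges, copied from the list above.
LANDMARK_RANGES = {
    "jaw": (1, 17),
    "right_eyebrow": (18, 22),
    "left_eyebrow": (23, 27),
    "nose": (28, 36),
    "right_eye": (37, 42),
    "left_eye": (43, 48),
    "mouth": (49, 68),
}


def region_points(landmarks, region):
    """Return the (x, y) points of one facial region.

    `landmarks` is assumed to be a sequence of 68 (x, y) tuples in
    detector order, indexed from 0, so the 1-based inclusive range
    [first, last] maps to the slice [first - 1:last].
    """
    first, last = LANDMARK_RANGES[region]
    return list(landmarks[first - 1:last])
```

For example, `region_points(landmarks, "right_eye")` would return the six points used later for the eye aspect ratio.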

Figure 2. 68 (x, y) coordinates for facial landmark detection

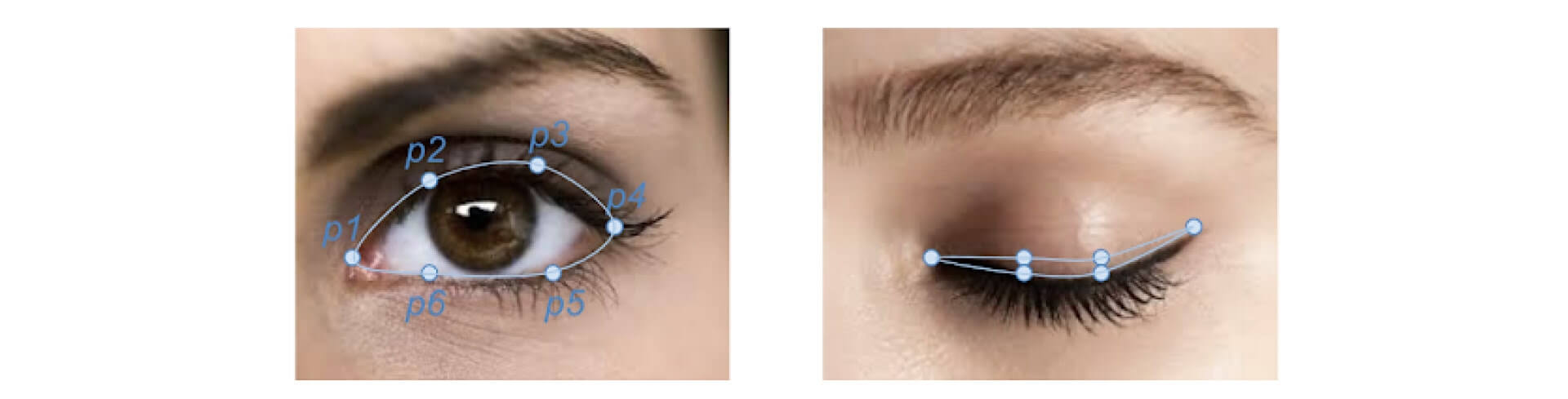

Eyes were the main objects for monitoring. Each eye was described by six coordinates in two-dimensional (2D) space (see figure 3).

Figure 3. The result of eye landmark recognition: open and closed eye

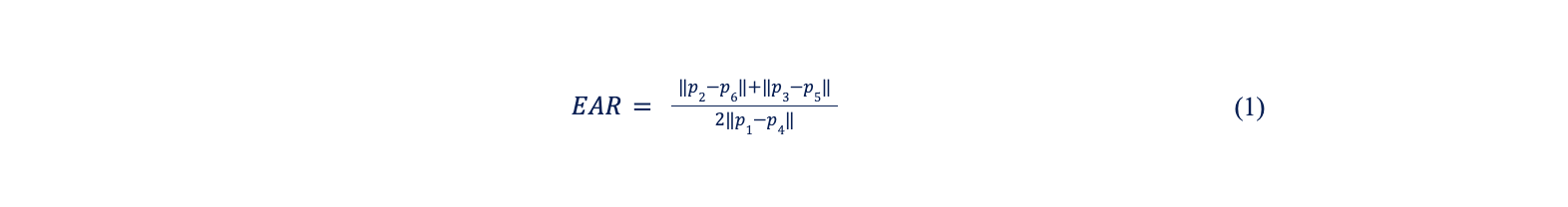

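To detect whether an eye was open or closed, we calculated the eye aspect ratio (EAR), the ratio of the eye’s height to its width (1):

$$\mathrm{EAR} = \frac{\lVert p_2 - p_6 \rVert + \lVert p_3 - p_5 \rVert}{2\,\lVert p_1 - p_4 \rVert} \tag{1}$$

where p1, p2, …, p6 are the 2D landmark coordinates shown in figure 3.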
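As a rough illustration, the EAR can be computed from the six eye points in a few lines of Python. This sketch assumes the common landmark ordering in which p1 and p4 are the eye corners and (p2, p6) and (p3, p5) are the upper and lower eyelid pairs; the function name `eye_aspect_ratio` is our own choice for this example.

```python
from math import dist


def eye_aspect_ratio(eye):
    """Compute the EAR from six (x, y) eye landmarks ordered p1..p6.

    Assumed ordering: p1 and p4 are the horizontal eye corners,
    (p2, p6) and (p3, p5) are vertical eyelid pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = dist(p2, p6) + dist(p3, p5)   # sum of the two eye heights
    horizontal = dist(p1, p4)                # eye width
    if horizontal == 0:
        raise ValueError("eye corners p1 and p4 coincide")
    return vertical / (2.0 * horizontal)


# Synthetic coordinates, for illustration only.
open_eye = [(0, 0), (2, 2), (4, 2), (6, 0), (4, -2), (2, -2)]
closed_eye = [(0, 0), (2, 0.3), (4, 0.3), (6, 0), (4, -0.3), (2, -0.3)]
```

With these synthetic points, the open eye gives an EAR of about 0.67 and the nearly closed eye about 0.1, which shows how the ratio drops as the eyelids approach each other.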

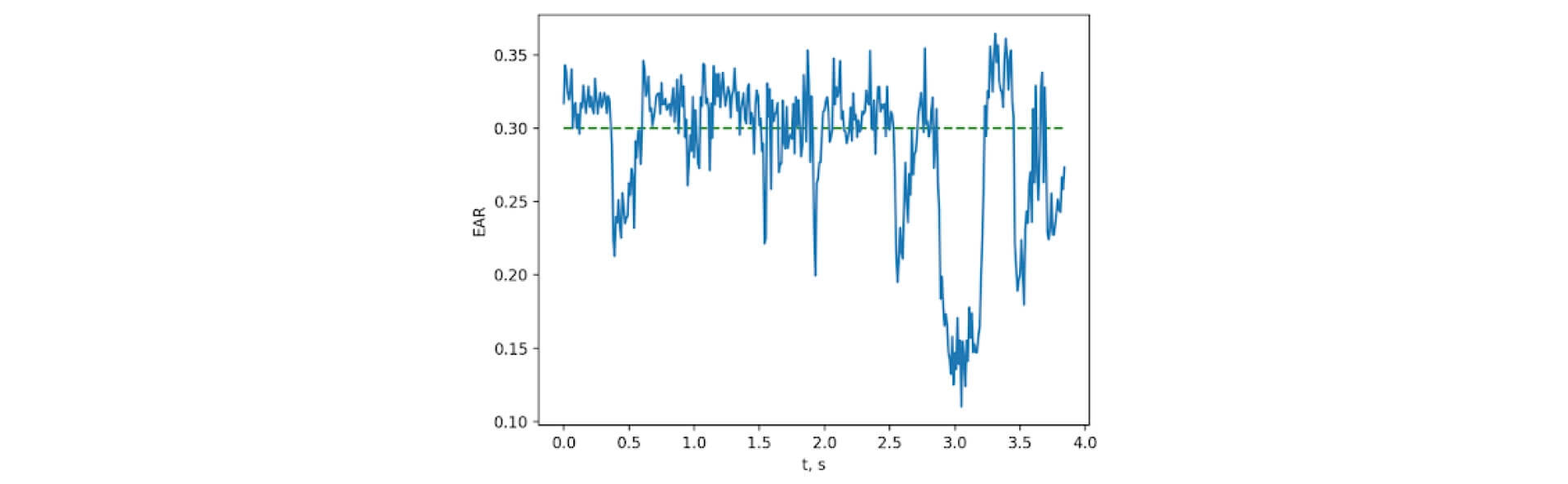

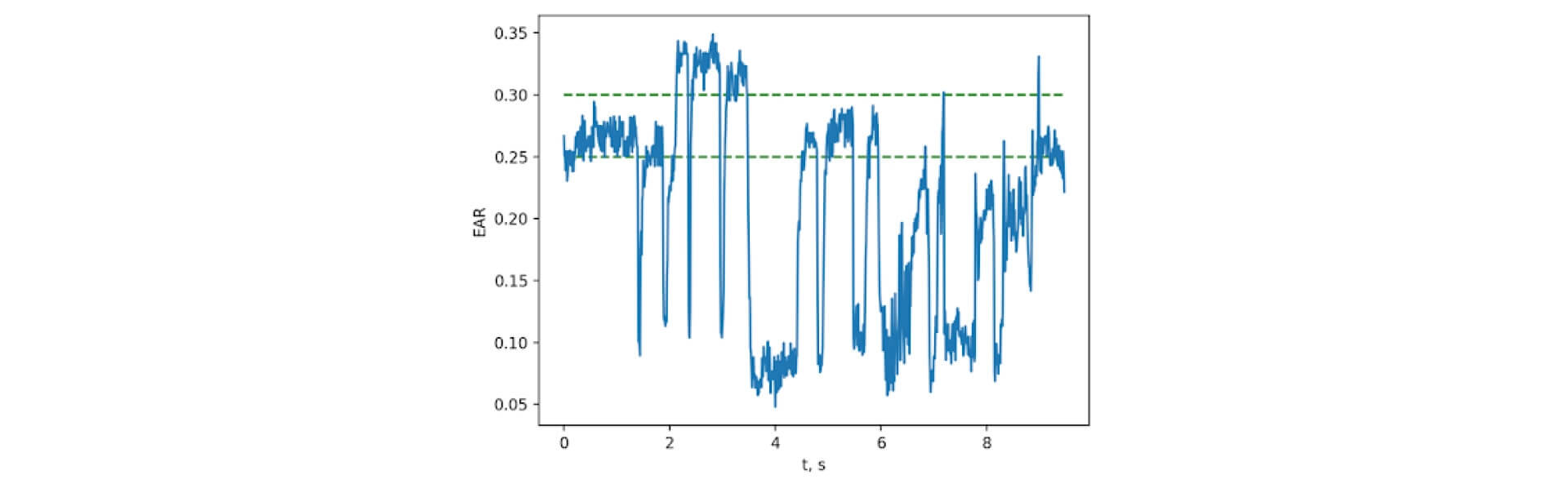

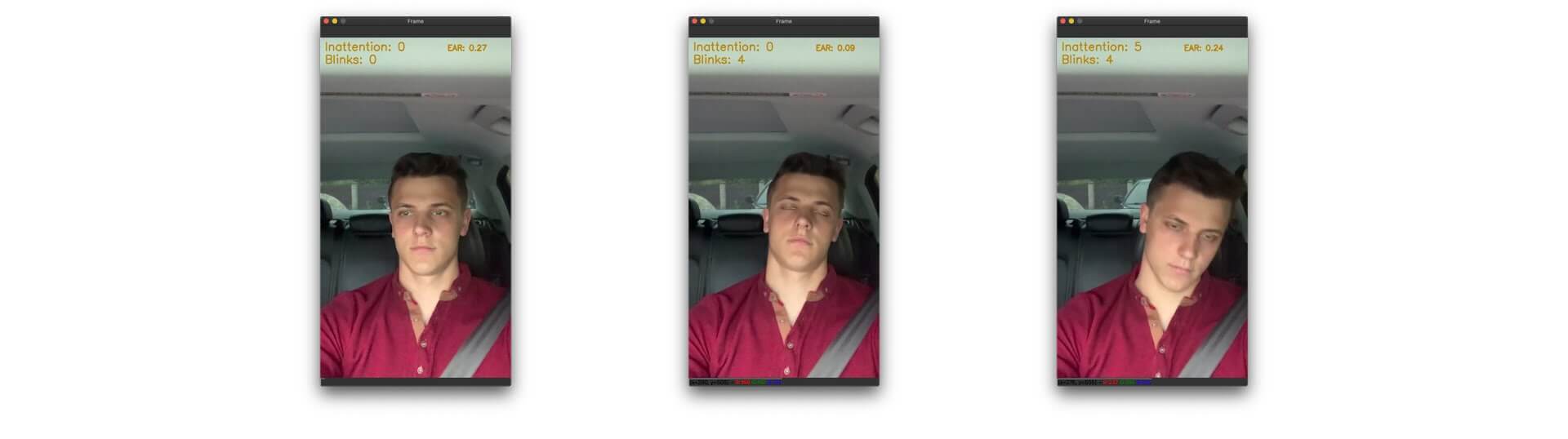

Based on the EAR, we were able to develop a classifier that predicts whether an eye is open or closed. To do so, we needed to define a boundary separating the two classes (open eye and closed eye). During our investigation, we noticed that the EAR fluctuates between day and night. During morning driving, EAR for an open eye was greater than 0.25, while in the evening it was more than 0.3. This happens because in the evening, the area of an open eye is smaller than in the morning due to the darkness and frequent exposure to headlights, making drivers squint to focus (see figures 4 and 5).
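A boundary of this kind can be sketched as a simple threshold check. Treating the reported open-eye EAR levels directly as decision boundaries is an assumption made for this example, as are the names `EAR_THRESHOLDS` and `is_eye_open`; a real system would likely calibrate the boundary per driver and lighting condition.

```python
# Open-eye EAR levels reported in the text. Using them directly as
# decision boundaries is an assumption of this sketch.
EAR_THRESHOLDS = {"morning": 0.25, "evening": 0.30}


def is_eye_open(ear, period):
    """Classify one EAR value as open (True) or closed (False).

    `period` selects the boundary and must be "morning" or "evening".
    """
    return ear > EAR_THRESHOLDS[period]
```

The same EAR value can therefore be classified differently depending on the time of day, which is why a single fixed boundary is not enough.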

Figure 4. EAR in the evening

Normally, the blinking frequency of a human eye is constant in the absence of external factors. However, blinking frequency often increases during long trips that induce drowsiness. The monitoring model also examines the driver’s inattention while driving. Inattention shows up as long glances in a direction other than the windscreen, sleep, use of smartphones or other devices behind the wheel, and so on.
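To make the idea of tracking blinking frequency concrete, here is a minimal sketch that counts blinks in a sequence of per-frame open/closed flags and converts the count to a per-minute rate. The function names, the `min_closed_frames` parameter, and the rule that a blink is a short closed run followed by an open frame are all assumptions for illustration, not the method used in the prototype.

```python
def count_blinks(eye_open_frames, min_closed_frames=2):
    """Count blinks in a sequence of per-frame open/closed flags.

    A blink is counted when a run of at least `min_closed_frames`
    consecutive closed frames is followed by an open frame. A closed
    run that is still ongoing at the end of the sequence is not counted.
    """
    blinks = 0
    closed_run = 0
    for is_open in eye_open_frames:
        if not is_open:
            closed_run += 1
        else:
            if closed_run >= min_closed_frames:
                blinks += 1
            closed_run = 0
    return blinks


def blinks_per_minute(eye_open_frames, fps, min_closed_frames=2):
    """Convert the blink count into a per-minute rate for a given frame rate."""
    duration_min = len(eye_open_frames) / fps / 60.0
    if duration_min == 0:
        return 0.0
    return count_blinks(eye_open_frames, min_closed_frames) / duration_min
```

Comparing this rate over a sliding window against the driver’s usual rate is one possible way to spot the increase in blinking that accompanies drowsiness.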

Figure 5. EAR in the morning

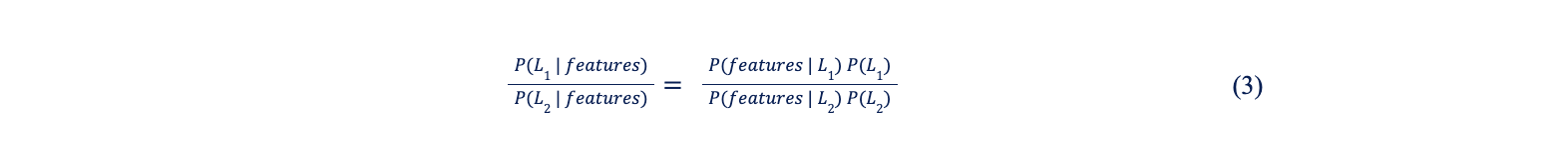

The object of observation includes eye positions in two-dimensional space (x, y). Due to limitations in computing power, we used a simple and fast ML classification method: naive Bayes classifiers. These rely on Bayes’ theorem, which describes the relationship between conditional probabilities of statistical quantities. Using Bayesian classification, we wanted to find the probability of a label (attention distraction) given some observed features (blinking, eye positioning, and eye direction), which can be written as P(L | features). Bayes’ theorem lets us express this through the following formula (2):

$$P(L \mid \text{features}) = \frac{P(\text{features} \mid L)\,P(L)}{P(\text{features})} \tag{2}$$

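Using two labels (let’s call them L1 and L2), we computed the ratio of the posterior probabilities for each label (3):

$$\frac{P(L_1 \mid \text{features})}{P(L_2 \mid \text{features})} = \frac{P(\text{features} \mid L_1)\,P(L_1)}{P(\text{features} \mid L_2)\,P(L_2)} \tag{3}$$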
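The posterior ratio is easy to evaluate numerically because the evidence term P(features) cancels out. The sketch below is illustrative only: `posterior_ratio` is a hypothetical helper, and the probabilities in the usage note are made-up numbers, not measurements from the system.

```python
def posterior_ratio(likelihood_l1, prior_l1, likelihood_l2, prior_l2):
    """Return P(L1 | features) / P(L2 | features).

    The evidence P(features) appears in both posteriors and cancels,
    so only the likelihoods P(features | Li) and priors P(Li) are needed.
    """
    return (likelihood_l1 * prior_l1) / (likelihood_l2 * prior_l2)
```

For instance, with made-up values P(features | L1) = 0.2, P(L1) = 0.1, P(features | L2) = 0.05, and P(L2) = 0.9, the ratio is 0.02 / 0.045, or roughly 0.44. A ratio below 1 means label L2 is the more probable one.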

Next, we needed a generative model that specified the hypothetical random process of data generation to compute P(features | Li) for each label. Defining this generative model for each label is the main part of training the Bayesian classifier.

To simplify the general version of such a training step, we made some assumptions about the form of the model. This is where the “naive” in “naive Bayes” comes in. Making naive assumptions about the generative model for each label, we can find a rough approximation of the generative model for each class and then proceed with the Bayesian classification.

We used a Gaussian naive Bayes classifier, which assumes that the data for each label is drawn from a simple Gaussian distribution with no covariance between dimensions. To fit the model, we only had to find the mean and standard deviation of the points within each label.
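The fitting step can be sketched in plain Python, without any ML library. This is a simplified illustration of Gaussian naive Bayes under the stated assumptions (independent dimensions, one Gaussian per label), not the code used in the prototype; the function names and the label strings in the usage note are hypothetical.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(points, labels):
    """Estimate the prior, per-dimension mean, and standard deviation per label.

    `points` is a list of (x, y) tuples and `labels` a parallel list.
    Each dimension is modeled independently (no covariance).
    """
    grouped = defaultdict(list)
    for point, label in zip(points, labels):
        grouped[label].append(point)

    model = {}
    for label, rows in grouped.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        stds = [
            # A small floor avoids division by zero for constant features.
            max(math.sqrt(sum((v - m) ** 2 for v in col) / n), 1e-9)
            for col, m in zip(columns, means)
        ]
        model[label] = {"prior": n / len(points), "mean": means, "std": stds}
    return model


def log_posterior(model, point):
    """Return the unnormalized log P(label | point) for every label."""
    scores = {}
    for label, params in model.items():
        score = math.log(params["prior"])
        for v, m, s in zip(point, params["mean"], params["std"]):
            score += -0.5 * math.log(2 * math.pi * s * s) - (v - m) ** 2 / (2 * s * s)
        scores[label] = score
    return scores


def predict(model, point):
    """Return the label with the highest posterior probability."""
    scores = log_posterior(model, point)
    return max(scores, key=scores.get)
```

After calling `model = fit_gaussian_nb(points, labels)` with eye positions labeled, say, `"attentive"` and `"distracted"`, `predict(model, (x, y))` returns the more probable label for a new eye position. Working with log probabilities keeps the computation numerically stable and cheap, which suits a low-power device.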

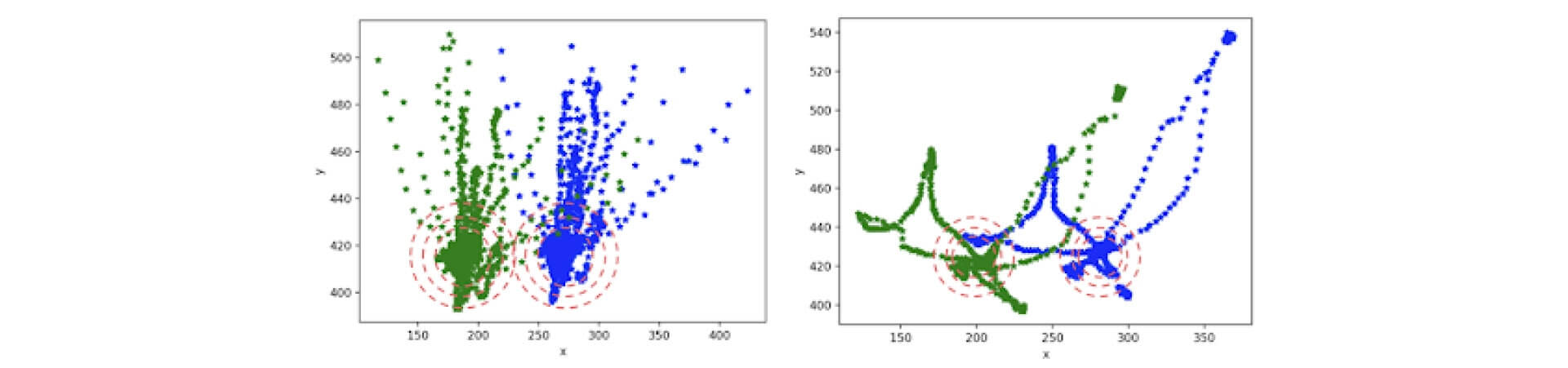

Figure 6 shows the results of the Gaussian naive Bayesian classifier, where the circles represent the probability levels of a point (an eye position) relative to the cluster center (a driver’s focus on the road and behavior behind the wheel). Based on this probabilistic prediction, we can flag driver inattention when the driver’s eye positions fall outside the confidence interval (the range of acceptable eye positions when a driver is at the wheel).
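One simple way to express such a check in code is to measure how far an eye position lies from the cluster center in units of standard deviation and compare that with a cutoff. This is a sketch under our own assumptions: the function names and the default cutoff of 3 standard deviations are illustrative and not taken from the prototype.

```python
import math


def standardized_distance(point, mean, std):
    """Distance from `point` to the cluster center in units of standard deviation.

    Each dimension is scaled by its own standard deviation, which matches
    a Gaussian with no covariance between dimensions.
    """
    return math.sqrt(sum(((v - m) / s) ** 2 for v, m, s in zip(point, mean, std)))


def is_inattentive(point, mean, std, max_distance=3.0):
    """Flag an eye position that falls outside the accepted region.

    `max_distance` is an assumed cutoff and would need tuning on real data.
    """
    return standardized_distance(point, mean, std) > max_distance
```

In practice, a single frame outside the region is probably not enough to raise an alert; requiring several consecutive flagged frames would reduce false alarms from quick mirror checks.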

Figure 6. The result of the Gaussian naive Bayesian classifier

Testing the monitoring system

We used the described calculations to implement the driver behavior system. Figures 7 and 8 show the morning and evening results generated by our model. The model uses only the positioning of facial features in 2D space (x, y). Even so, it serves as a proof of concept for a future system and shows the potential for further research.

Figure 7. Testing the system in the morning

Figure 8. Testing the system in the evening

Data security

The system only works offline. Similarly to Apple’s Face ID technology, data analysis is done locally on the device. Data from the camera isn’t stored. Warning statistics are stored locally on the device with the driver’s consent. The driver can prohibit statistics from being collected or delete the entire history even if they previously agreed to record events.

Wrapping up

In this article, we focused on developing a model that identifies specific facial features of a driver and classifies them to recognize the driver’s behavior behind the wheel. We investigated methods for monitoring the opening and closing of eyes based on the eye aspect ratio (EAR) and detecting inattention using a naive Bayesian classifier. For now, the implemented system is a concept, but it shows the potential for further research. The main benefit of such a system is that it works offline. In the next article, we will discuss how the system works on a low-power microcomputer.

Tap into data science with Lemberg Solutions

AI software development services can significantly expand the ways we use technologies in everyday life. Our team of data scientists and embedded engineers, including Andriian, is always ready to embrace challenging projects and help you develop and integrate a working algorithm with hardware. Get in touch with us via our contact page and we will be happy to lend a hand.