Data-driven decisions tend to be more reliable than intuition-driven ones, no matter how well you think you know your domain. Machine learning (ML) methods analyze and generalize data in a human-like way, but they can process far larger volumes of data than a person can, which often makes them more precise and efficient.

However, there’s another important thing — the quality of the input data eventually determines the quality of your decisions. Researchers cannot expect a valid result if the ML model was trained using poor-quality data.

We talked to Pavlo Tkachenko, our data scientist, and figured out how proper data preparation will help you automate routine business tasks, increase efficiency, reduce costs, prevent problems, and optimize your overall operations. If you’re looking to improve your business processes with data science software development or would like to develop an AI-based solution, keep reading our in-depth guide on data preparation steps.

Data standards: what data is useful?

First, let's clarify the difference between information and data. Information is any set of facts, while data is information in a form that is ready to be processed. If you need to determine the number of times a specific word is used in a book, you'll have to transform the paper book (information) into an electronic text (data). The content of information and data is the same; only the form in which it is presented differs.

According to standard requirements, information should be:

Relevant

The information should be useful from the point of view of solving the problem.

For example: should you record and store high-resolution color video if your aim is to detect large moving objects in it in real time? For this task, pixel colors and fine image detail are irrelevant. Low-resolution grayscale video can satisfy your needs.
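As a rough illustration of how much irrelevant information this removes, the sketch below converts a tiny color frame to grayscale and averages it down to a lower resolution. The frame contents and the function names `to_grayscale` and `downsample` are hypothetical. The weights are the commonly used BT.601 luma coefficients.

```python
def to_grayscale(frame):
    """Convert a frame of (R, G, B) tuples into a 2-D list of luma values."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in frame
    ]


def downsample(gray, factor):
    """Average non-overlapping factor x factor blocks of a grayscale frame."""
    height, width = len(gray), len(gray[0])
    result = []
    for top in range(0, height - factor + 1, factor):
        row = []
        for left in range(0, width - factor + 1, factor):
            block = [
                gray[y][x]
                for y in range(top, top + factor)
                for x in range(left, left + factor)
            ]
            row.append(sum(block) / len(block))
        result.append(row)
    return result


# Hypothetical 4x4 frame: two white rows on top, two black rows below.
white, black = (255, 255, 255), (0, 0, 0)
frame = [[white] * 4, [white] * 4, [black] * 4, [black] * 4]

gray = to_grayscale(frame)
small = downsample(gray, factor=2)

values_before = len(frame) * len(frame[0]) * 3   # three channels per pixel
values_after = len(small) * len(small[0])        # one value per block
```

In this toy case, 48 stored numbers shrink to 4, while the large bright region is still clearly distinguishable from the dark one.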

It’s important to remember that if you collect and store irrelevant information and don’t use it in the analysis process, you’re simply wasting your money and time.

Accurate and consistent

In general, information accuracy is about the ratio of valuable information to the noise (errors) present in it: the more noise, the lower the information quality.
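One common way to put a number on this is the signal-to-noise ratio (SNR). The sketch below assumes you have a clean reference signal and the noise component as separate sequences, which is rarely the case in practice. The sample values and function names are hypothetical.

```python
import math


def mean_power(samples):
    """Average squared amplitude of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    return 10.0 * math.log10(mean_power(signal) / mean_power(noise))


# Hypothetical measurements: the noise amplitude is one tenth of the signal.
clean = [1.0, -1.0, 1.0, -1.0]
noise = [0.1, -0.1, 0.1, -0.1]

ratio_db = snr_db(clean, noise)  # about 20 dB
```

A higher value means a larger share of useful information relative to errors.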

Complete

If the description of the modeled object is incomplete, the model will have to extrapolate beyond the conditions it was trained on instead of interpolating within them. Extrapolative predictions are less credible than interpolative ones.

For example: could a weather prediction model trained on the observations of winter and spring make accurate predictions for the summer and fall period?

Definitely not, because the model cannot reproduce dependencies inherent in a time period that was not part of its training data.
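The weather example can be sketched with a toy model. Everything here is an assumption made for illustration: the temperature curve is a made-up yearly cosine cycle, and a straight line fitted by least squares stands in for the ML model.

```python
import math


def seasonal_temperature(day):
    """Hypothetical yearly temperature cycle in deg C, coldest at day 0."""
    return 10.0 - 12.0 * math.cos(2.0 * math.pi * day / 365.0)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def mean_abs_error(slope, intercept, days):
    """Average absolute gap between the fitted line and the true curve."""
    errors = [abs(slope * d + intercept - seasonal_temperature(d)) for d in days]
    return sum(errors) / len(errors)


train_days = list(range(0, 182))     # "winter and spring": seen in training
unseen_days = list(range(182, 365))  # "summer and fall": never seen

slope, intercept = fit_line(
    train_days, [seasonal_temperature(d) for d in train_days]
)

error_seen = mean_abs_error(slope, intercept, train_days)
error_unseen = mean_abs_error(slope, intercept, unseen_days)
```

The fitted line keeps rising after day 182 while the real curve turns downward, so `error_unseen` comes out far larger than `error_seen`.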

Note! If information is incomplete, it might be useless.

Reliable

Information from two different trusted sources may contain contradictory statements, which creates uncertainty and decreases information quality.

ML methods are incapable of differentiating correct signals from the wrong ones. That’s why they try to generalize all available data and find a compromise among the contradictions. However, a compromise means that your model will be affected by unreliable information.

Timely

Information may lose its value if you receive it with a delay. This is especially true when it comes to managing complex systems in real-time mode.

For example: late information from the stock exchange can destroy an investor's strategy.

If information isn't up-to-date, the model won’t reflect the situation accurately. That’s why it won't work efficiently with your current objectives and instead will lead to incorrect conclusions. If the description of the modeling object is out-of-date, conclusions concerning the management of this object also won’t be entirely relevant.

The more noise there is in the data, the greater its impact on the model you're building. The more complete the information and the lower the noise, the better the quality of the final model.

Neural networks and other ML methods are considered to be more resistant to noise present in data compared to classic modeling methods. Still, noise is capable of decreasing the model’s quality as well as affecting the decisions made based on its prediction. Noise exists in any information and communication channel; information is a phenomenon, which occurs when there’s a transmitter and receiver with a connection channel between them. For example, in communication systems, natural noise comes from random thermal motion of electrons, atmospheric absorption, and cosmic sources. Your task is to make sure the share of noise is as small as possible.

Similar requirements apply to data, since data is information in a processable form. The quality of the data used for ML modeling directly influences the quality of the final results. Information is filtered and transformed while datasets are prepared, so a dataset is expected to carry more value per unit of volume than the raw information it was built from. In other words, a dataset is informationally denser than its source.

If even one of the listed requirements isn't met, you need to fix the weak points before using the dataset. If you don't, model quality can drop significantly and affect the resulting managerial decisions. Accumulating information (data) without using it wastes money and might cause more severe consequences than poor managerial decisions or low-quality ML models. If you can't use the information because it doesn't comply with standard requirements, you're simply throwing your money away.

High-quality information is capable of increasing income, while low-quality information most probably will lead to losses for your business.

If you don't have a plan for how you're going to use the information, collecting mediocre information won't bring any positive results. Before starting the collection process, you should understand how the information will be applied and which benefits you can expect to get.

Data collection process

Data collection is the initial step of the data preparation phase. There are two possible ways to acquire data:

Load/buy from open sources

Some types of information are sold or can be downloaded online, free of charge. Oftentimes, you won’t even need to supplement the acquired information. However, it’s crucial to make the most of available information and transform it into high-quality datasets. Additionally, you’ll have to preserve all useful components present in the initial piece of information and exclude any noise that is likely present in it.

Collect all necessary information on your own

Collecting the description of the object on your own requires formalizing the information collection procedure. This means defining clear rules concerning:

- Which information are you collecting?

- In which way are you collecting it?

- How are you going to validate its quality?

Then, you define how the parameters of the object should be described using numerical variables. Mistakes made during data collection can limit the dataset's applicability or make it unusable altogether. As a result, you'll have to run additional iterations of information collection.

To avoid these mistakes, try to think through every detail beforehand and get everything right on the first try. Each mistake equals budget losses. If you're building an IoT product, you should plan how you'll version the data in case the hardware part of your product is modified during data collection. That's why you have to organize the whole infrastructure so that it ensures trouble-free data collection and supports research on the object being modeled. Remember, if you collect an incomplete dataset, then in the future you'll have to start the whole collection process over.
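One possible way to keep data usable across hardware changes is to tag every sample with the revision of the device that produced it. The sketch below is a minimal illustration. The `SensorSample` fields, revision names, and readings are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSample:
    timestamp_s: float
    values: tuple           # raw readings, e.g. accelerometer axes
    hardware_revision: str  # hypothetical tag for the device version
    schema_version: int     # hypothetical tag for the record layout


def group_by_hardware(samples):
    """Split samples by hardware revision so each can be calibrated separately."""
    groups = defaultdict(list)
    for sample in samples:
        groups[sample.hardware_revision].append(sample)
    return dict(groups)


samples = [
    SensorSample(0.00, (0.1, 0.0, 9.8), "rev-A", 1),
    SensorSample(0.02, (0.2, 0.1, 9.7), "rev-A", 1),
    SensorSample(0.00, (0.1, 0.0, 9.8), "rev-B", 2),
]

groups = group_by_hardware(samples)
```

With the revision stored next to each record, data from an earlier device can be converted or filtered later instead of being discarded.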

Let’s take a look at an example:

Lemberg Solutions’ data science team built an ML model for the valuation of real estate in Copenhagen for one of our clients. Information about thousands of office spaces was used while building the model. Every single office space was described with a large number of input parameters.

One of these parameters was the availability of a washroom in the office. As the data we used for building the model was created by users, it was obvious that not all of them approached the fill-in process consistently. In many cases, they didn’t note the availability of a restroom on their premises, while in reality, the lease of facilities without a washroom was extremely rare.

Because of the users’ inattentiveness, this parameter was filled in correctly in only half of the cases. Later, we noticed that such mistakes were more common among cheap office spaces. When we started to build the model, the variability of values for this parameter was assessed by the model as a relevant input from the dataset.

The model allocated some weight to this parameter. As a result, in one of the experiments, we determined that the availability of a washroom was a decisive parameter for lease price formation.

However, this conclusion didn't correspond to reality: because of a major error in the input information, the model produced a wrong result. Overlooking such factors can cause the model to behave incorrectly and produce wrong results.
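The effect described in this example can be reproduced on synthetic data. The sketch below is not the client dataset. It assumes 100 hypothetical listings that all have a washroom, where the flag is filled in for only 1 in 5 cheap listings and 4 in 5 expensive ones.

```python
def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / (var_x * var_y) ** 0.5


# Hypothetical listings sorted by price: index 0 is cheapest, 99 is priciest.
prices = [100 + 9 * i for i in range(100)]

# Ground truth: every office has a washroom, so the flag carries no information.
true_flag = [1] * 100

# Recorded flag: users of cheap listings skip the field far more often.
recorded_flag = []
for i in range(100):
    if i < 50:   # cheap half: filled in for 1 listing out of 5
        recorded_flag.append(1 if i % 5 == 0 else 0)
    else:        # expensive half: filled in for 4 listings out of 5
        recorded_flag.append(0 if i % 5 == 0 else 1)

share_correct = sum(recorded_flag) / len(recorded_flag)
spurious_correlation = pearson(recorded_flag, prices)
```

The recorded flag is correct in half of the cases and correlates positively with price, even though the real attribute is constant. A model trained on the recorded flag would pick up the data-entry pattern, not a property of the offices.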

How can Lemberg Solutions help with data collection?

When it's impossible to buy the necessary dataset or find it for free, and you have little or no experience in collecting information on your own, delegating this procedure is the most viable option.

Lemberg Solutions is a reliable data science partner with vast experience in data collection for our clients. For example, for one of our clients, HorseAnalytics, we had to collect a dataset that would enable an ML model to detect a horse's gait: walk, trot, or gallop.

To collect the dataset, we created an Android app that gathered readings from the smartphone's accelerometer and gyroscope. After researching various attachment options, we determined the most efficient method of fastening the smartphone to the horse: sewing a pocket to the saddle lining and putting the smartphone inside.

The app dictated instructions to the rider to ensure we received valid data about the horse's movements. We determined each horse's characteristics and worked out how much data should be collected. The difficulty of data collection lay in the diversity of horses: we had to analyze racehorses as well as common horses.

It was impossible to buy such a specific dataset.

Another example is an automated system for pig-weight monitoring. We developed a method for contactless measurement of pigs' weight using a stereo camera. Though similar datasets with pigs exist, each pig breed has its own body structure; proportions and weight depend heavily on the breed. That's why we had to collect the dataset on our own.

First, we collected photos of the specific pig breed raised by our customer. Afterward, we determined the relationship between the physical dimensions of the pig, the proportions of this particular breed, and its weight.

The collection process is often unpredictable: while gathering the dataset, we changed the core source of information midway. We also changed the manufacturer and type of the stereo camera we used for collecting photos. A well-organized collection process enabled us to make the most of the data from the first device as well as the data received from the later hardware version.

Usually, customers have a broad vision of their products and plan to enhance their projects in the future. They don’t wish to merely solve one problem and forget about it. Instead, they aim to improve, extend, and scale their business processes. That’s why it’s important to predict and collect all potentially useful data from the start. The value of such an “extended” dataset is much higher. Lemberg Solutions can help with all sorts of data collection consultations at any stage of your process.

Data preparation methods

Data normalization

If your dataset was collected from various information sources, some parameters are likely to be described using different measurement units.

For example, temperature can be recorded in either Celsius or Fahrenheit. To use the temperature values, we have to convert them all to a single scale, either Celsius or Fahrenheit.
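Bringing mixed temperature readings to one scale could look like the sketch below. The `(value, unit)` record layout and the `to_celsius` helper are assumptions made for the example.

```python
def to_celsius(value, unit):
    """Convert a temperature reading to degrees Celsius."""
    if unit == "C":
        return float(value)
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0
    raise ValueError(f"Unknown temperature unit: {unit!r}")


# Hypothetical readings merged from sources that use different units.
readings = [(21.5, "C"), (68.0, "F"), (212.0, "F")]

celsius = [to_celsius(value, unit) for value, unit in readings]
```

Raising an error on an unknown unit is a deliberate choice here: a silently passed-through value in the wrong unit would become noise in the dataset.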

Before using a dataset in the process of modeling, each of the input parameters should be normalized. This means you need to bring all values of these parameters to the range from 0 to 1.

Example: You have a dataset describing anthropometric parameters. Weight measurements will range from 50 to 100 kg. Height will most probably range between 150 and 200 cm. Age (if we consider adults) will range between 15 and 90 years. Each of these parameters is described within a different range.

Moreover, the numerical range depends on the chosen units. For example, let's assume the height is measured not in centimeters but in millimeters. Then the values are multiplied by 10 and the range becomes 1500 to 2000 mm.

If your data enters the neural network in the form you initially received it, there's a high probability that parameters with numerically larger ranges will have a greater impact on how the neurons' weights are formed.

You don't know from the start which parameters are decisive (most informative) for building the model. To keep the scales from biasing the model, rescale each parameter separately: subtract the parameter's minimum from each value and divide by the parameter's range (maximum minus minimum). Dividing by the maximum alone covers the full range from 0 to 1 only when the minimum is 0. As a result, every parameter ends up in the same range, from 0 to 1.

Based on the variability of these parameters, the network decides what weight should be allocated to each of them. The initial scales won’t determine the importance of parameters.
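One common way to reach the range from 0 to 1 is min-max scaling, sketched below on the anthropometric example. The sample values and the `min_max_scale` helper are hypothetical. Note that dividing by the maximum alone would map weights of 50 to 100 kg to 0.5 to 1, so the minimum is subtracted first.

```python
def min_max_scale(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        # A constant parameter has no spread; map it to 0 to avoid dividing by zero.
        return [0.0 for _ in values]
    return [(v - lowest) / (highest - lowest) for v in values]


# Hypothetical anthropometric dataset, one list per input parameter.
people = {
    "weight_kg": [50, 75, 100],
    "height_cm": [150, 175, 200],
    "age_years": [15, 45, 90],
}

scaled = {name: min_max_scale(column) for name, column in people.items()}

# For comparison: dividing by the maximum only.
divided_by_max = [w / max(people["weight_kg"]) for w in people["weight_kg"]]
```

After scaling, weight and height both become `[0.0, 0.5, 1.0]`, so neither dominates the other merely because of its units.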

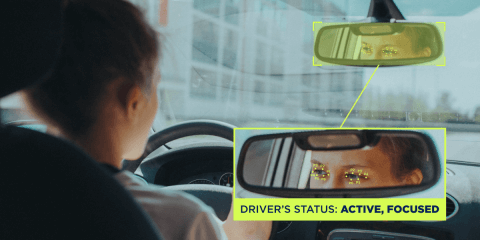

Data labeling

The majority of practical tasks are solved with ML methods that belong to the supervised learning paradigm. To train a neural network, you need a multitude of training examples (data) where each vector of input parameters corresponds to a specific value of the output parameter.

For a classification task, the class label is such an output parameter. Assigning labels is one of the data preparation steps and is called data labeling. Usually, this task is done manually or in a semi-automated mode. The quality of the labeling process determines the value of the resulting dataset.

If class labels were arranged carelessly with errors, and these errors weren’t detected at the stage of dataset validation, it would lead to a loss of dataset quality.

To label your dataset, you don't necessarily need a highly-qualified expert. In many cases, it can even be done by students.

However, you won’t get the desired result if this type of work is performed without:

- Supervision of experts

- A correct definition of the labeling procedure

- Verification of the labeling quality
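One simple way to verify labeling quality is to have two people label the same items and review the disagreements. This is an illustrative sketch, not a description of a specific procedure. The labels and the `agreement_report` helper are hypothetical.

```python
def agreement_report(labels_a, labels_b):
    """Return the share of matching labels and the indices that need review."""
    if len(labels_a) != len(labels_b):
        raise ValueError("Both annotators must label the same items")
    disagreements = [
        index
        for index, (a, b) in enumerate(zip(labels_a, labels_b))
        if a != b
    ]
    rate = 1.0 - len(disagreements) / len(labels_a)
    return rate, disagreements


# Hypothetical labels from two annotators for the same five recordings.
annotator_a = ["trot", "gallop", "gallop", "trot", "trot"]
annotator_b = ["trot", "gallop", "trot", "trot", "trot"]

rate, to_review = agreement_report(annotator_a, annotator_b)
```

Here the annotators agree on 4 of 5 items, and item 2 goes to an expert for a final decision.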

Lemberg Solutions has strong expertise in data labeling, as well as other data preparation steps, and will help you with each of them.

Detection of abnormalities and data cleaning

Data cleaning is another crucial step of the data preparation process you should perform before training the model. Several methods enable the detection of abnormalities in the dataset. Abnormalities are values that stand out strongly from the overall pattern of the dataset with no obvious logical explanation for the divergence. At this point, your key task is to find an explanation for the deviation.

If you come across an abnormality and manage to explain it, you can verify the accuracy of the data, as the divergence you observed wasn’t an error. This knowledge will provide you with a better understanding of the modeling object.

However, if you can't find an explanation, treat it as an error. For example, a manually entered value was incorrect: instead of 1500 euros per month, the operator entered 150 or 15 000 euros. Such abnormalities should be excluded from the training dataset to make sure they don't harm the model.

Data omissions (missing values) are also a common issue in datasets. Let's say an object is described with 20 parameters and 5 of them are left empty. Most ML methods are incapable of working with such deficient data. Thus, there are two possible solutions in this case:
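Candidates for such checks can be flagged automatically. The sketch below uses a modified z-score based on the median absolute deviation (MAD), which is one common technique among several. The rent values are made up, and 3.5 is a frequently used rule-of-thumb threshold.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # no spread around the median; nothing can be ranked
    return [
        index
        for index, v in enumerate(values)
        if 0.6745 * abs(v - center) / mad > threshold
    ]


# Hypothetical monthly rents in euros, including two data-entry slips.
rents = [1450, 1500, 1520, 1480, 1550, 150, 1500, 15000, 1490, 1510]

suspect_indices = flag_outliers(rents)
suspect_values = [rents[i] for i in suspect_indices]
```

The check flags 150 and 15000. Flagged values are only candidates: each one still needs a human to decide whether it has an explanation or is an error.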

- Delete each record where one of those 5 values is omitted

- If real values of the attributes are available only in the minority of records, you can delete these input parameters (attributes) completely
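Both options can be sketched in a few lines. The records, attribute names, and the 50% cutoff for "available only in the minority of records" are assumptions made for the example.

```python
def drop_incomplete_records(records):
    """Keep only records in which every attribute has a value."""
    return [r for r in records if all(v is not None for v in r.values())]


def drop_sparse_attributes(records, min_filled_share=0.5):
    """Remove attributes that are filled in for less than the given share of records."""
    names = list(records[0].keys())
    keep = [
        name
        for name in names
        if sum(r[name] is not None for r in records) / len(records) >= min_filled_share
    ]
    return [{name: r[name] for name in keep} for r in records]


# Hypothetical office listings; None marks an omitted value.
records = [
    {"area_m2": 120, "floor": 3, "parking": 2},
    {"area_m2": 80, "floor": None, "parking": None},
    {"area_m2": 200, "floor": 5, "parking": None},
    {"area_m2": 60, "floor": 1, "parking": None},
]

only_complete = drop_incomplete_records(records)
without_sparse = drop_incomplete_records(drop_sparse_attributes(records))
```

Deleting incomplete records right away leaves 1 record out of 4. Removing the mostly empty `parking` attribute first leaves 3 complete records, at the cost of losing that attribute.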

Incomplete data can be interpreted in 2 ways:

- Lack of an information component about some parameters. For example, you’re modeling a meteorological forecast system. However, your model doesn’t utilize the humidity parameter, even though it’s necessary for this type of model. In such cases, you’ll have to collect this lacking parameter.

- Individual incomplete records. You’ll eventually have to exclude them because ML methods don’t allow you to use incomplete vectors.

Data preparation insights

You can come across 2 possible opposite situations while preparing data:

1. Your dataset is strictly limited because it’s impossible to collect more information. In most cases, it concerns rare situations (a catastrophe), social and economic domains, or outdated information about events that occurred years ago. Some social experiments are considered unethical; thus, it’s often impossible to collect some sorts of data.

For example: you need to create a system for early diagnosis and prevention of electric vehicle battery fire incidents. To collect the dataset and detect signs that affect the probability of the battery catching fire, you should ruin many batteries while observing the change in the parameters selected for monitoring. Obviously, the dataset will be limited, as its total cost will be determined by the number of batteries burned.

A limited dataset creates a problem: you'll have to find an ML method that can build a reliable model from a limited training dataset.

2. The opposite situation is when you have an extensive data volume — where hundreds of millions of records describe the object. Such data volume can’t be processed within the limits of acceptable time intervals using classic methods. Thus, you should reduce the general dataset to the training dataset with a processable volume that reflects all the dependencies contained in the general dataset.
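One simple way to shrink a dataset while keeping its composition is stratified sampling, sketched below. It preserves the share of each group but does not by itself guarantee that every dependency in the full dataset survives. The records, the `segment` field, and the 10% fraction are hypothetical.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, fraction, seed=0):
    """Sample the same fraction from every group defined by key(record)."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    groups = defaultdict(list)
    for record in records:
        groups[key(record)].append(record)
    sample = []
    for members in groups.values():
        count = max(1, round(len(members) * fraction))  # keep rare groups alive
        sample.extend(rng.sample(members, count))
    return sample


# Hypothetical dataset: 900 "cheap" and 100 "premium" records.
records = [
    {"id": i, "segment": "premium" if i % 10 == 0 else "cheap"}
    for i in range(1000)
]

training = stratified_sample(records, key=lambda r: r["segment"], fraction=0.1)
premium_count = sum(1 for r in training if r["segment"] == "premium")
```

The reduced set holds 100 records, 10 of them premium, so the rare segment keeps its 10% share instead of being lost to chance.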

Why delegate data preparation for your project to Lemberg Solutions

Having the same professionals collect your data and then build the ML model on the dataset they collected is key to a successful project.

In the opposite case, where data is collected by one team with its own vision and then handed over to another team at the next stage, the final result might be disappointing.

In case you collect a dataset on your own, try to manage this process according to recommendations from modeling experts and under their supervision. The quality of collected data should comply with the verification procedures determined by the modeling or ML-methods experts. This is the only option to avoid potential inconsistencies between the expectations of the data-modeling experts and the experts responsible for data collection.

Lemberg Solutions recommends carefully considering expert advice even before starting the data collection process. This way, you will save budget and time by avoiding the collection of low-quality data.

Lemberg Solutions has extensive experience in AI software development services and in collecting, preparing, and processing data for a variety of data science projects. To learn more about how our services and expertise can help your business, simply contact us via the form, and we'll get back to you shortly.