The newly adopted EU AI Act will affect the healthcare industry in the coming years. New requirements for technical documentation, risk assessment, data quality, and human oversight are just a few of the changes to expect. Keep reading to learn the key changes you need to know.

Responsibilities: What AI medical device providers need to know

Under the EU AI Act, a provider is anyone who develops a high-risk AI system (or has one developed) and places it on the market or puts it into service under their own name or trademark.

Providers carry more obligations than any other party in the AI value chain. Their responsibilities include:

- Identifying risks and putting measures in place to address them

- Maintaining the quality of training, validation, and testing data

- Preparing technical documentation and keeping it up to date

- Enabling AI systems to automatically record events (logs) throughout their lifetime

- Making AI systems transparent to their users

- Ensuring human oversight

- Making AI systems accurate, robust, and secure.
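Of these duties, automatic event recording is the one that maps most directly onto code. The sketch below is a minimal illustration, assuming a Python inference service that writes one JSON line per prediction to a local file. The function name `log_inference_event` and all field names are hypothetical; the AI Act does not prescribe a log format, and a production system would also need retention, access control, and tamper protection.

```python
import hashlib
import json
from datetime import datetime, timezone


def log_inference_event(log_path, model_version, input_bytes, output, operator_id=None):
    """Append one inference event to a JSON Lines log and return the record.

    Hypothetical sketch: field names are illustrative, not taken from the AI Act.
    """
    record = {
        # UTC timestamp so events from different sites can be ordered consistently.
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Store a SHA-256 digest rather than raw patient data, so the log
        # can link an event to its input without duplicating sensitive content.
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "output": output,
        # Optional identifier of the clinician or operator who used the result.
        "operator_id": operator_id,
    }
    # Append mode keeps earlier events intact; each line is one JSON object.
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record
```

For example, `log_inference_event("events.jsonl", "1.4.0", scan_bytes, {"label": "nodule", "score": 0.91}, operator_id="clin-042")` appends one line per call. Hashing the input is a design choice that keeps the log traceable while limiting how much sensitive data it holds.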

Understanding your responsibilities will make your transition smoother and help you avoid the fines the EU AI Act imposes for non-compliance. For a deeper look at the EU AI Act's rules, see our related article covering all the essential information.

New authorities

Compliance will be checked by national competent authorities: each Member State must designate at least one notifying authority and at least one market surveillance authority. In addition, the AI Office, a new EU-level body within the European Commission, will help oversee and enforce the requirements set out in the AI Act.

Gap analysis and integration challenges

The EU AI Act adds requirements on top of existing industry regulations. However, the EU Medical Device Regulation (MDR) 2017/745 remains the primary regulation for medical devices, so the AI component and its documentation are assessed as part of the medical device.

Every healthcare business affected by the Act will need to conduct a gap analysis and determine what changes its technical documentation requires. For instance, you may need to add a description of the AI system's classification, an analysis of potential harms, and a plan for human oversight.

Although the EU AI Act describes the required documentation components in detail, aligning them with existing regulations may be challenging and time-consuming. It is therefore essential to start as early as possible.

High data quality standards for healthcare development

The AI Act introduces quality criteria for training, validation, and testing datasets. In particular, datasets must be relevant, sufficiently representative, and, to the best extent possible, free of errors and complete.

Under the EU AI Act, you may need to pay closer attention to data collection methods and document the purposes for which the data is used. Your datasets should also cover diverse samples and edge cases to reduce bias and discrimination, and they should be kept up to date to ensure accurate results.
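Some of these dataset criteria can be checked automatically before training. The sketch below is a minimal illustration, assuming tabular records held as Python dictionaries. The function name `dataset_quality_report`, the three checks it runs, and the default 5% under-representation threshold are all hypothetical choices, not thresholds taken from the Act.

```python
from collections import Counter


def dataset_quality_report(records, required_fields, group_field, min_group_share=0.05):
    """Summarize completeness, duplicates, and subgroup coverage of a dataset.

    Hypothetical sketch: the checks and the default threshold are assumptions.
    """
    total = len(records)

    # Completeness: records where any required field is absent or None.
    incomplete = sum(
        1 for r in records if any(r.get(f) is None for f in required_fields)
    )

    # Duplicates: identical records (compared on the required fields),
    # counting every copy beyond the first.
    keys = Counter(tuple(r.get(f) for f in required_fields) for r in records)
    duplicates = sum(count - 1 for count in keys.values() if count > 1)

    # Coverage: subgroups (e.g. sex or age band) whose share of the dataset
    # falls below the chosen threshold, as a rough signal of sampling bias.
    groups = Counter(r.get(group_field) for r in records)
    underrepresented = sorted(
        str(g) for g, count in groups.items()
        if total and count / total < min_group_share
    )

    return {
        "total": total,
        "incomplete": incomplete,
        "duplicates": duplicates,
        "underrepresented_groups": underrepresented,
    }
```

Checks like these only flag candidates for review; deciding whether a subgroup is adequately represented for the intended purpose, and documenting how the data was collected, still requires human judgment.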

More valuable data for malicious actors

As the volume of high-quality, sensitive data grows, it will become a more attractive target for cyberattacks. Cybersecurity is already one of the General Safety and Performance Requirements (GSPR) in the EU MDR.

For now, EU-level cybersecurity guidance is consolidated in MDCG 2019-16, Guidance on Cybersecurity for Medical Devices. However, individual countries may impose their own specific cybersecurity requirements.

To avoid data leaks and the associated financial losses, you may need to invest more effort in identifying your datasets' weak points and other potential vulnerabilities, and in planning how to minimize cybersecurity risks. The testing phase may also require a larger budget.

Healthcare development and human oversight

High-risk AI systems need effective human oversight during development and use to minimize risks to health, safety, and fundamental rights. A detailed human oversight plan can speed up your compliance process and prevent costly errors. For instance, domain experts can regularly review the AI system's decisions to confirm they are appropriate, while data science teams use validation datasets to evaluate how well the system makes specific decisions.
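One common way to put this kind of expert review into practice is to route low-confidence outputs to a clinician and to track how often reviewers agree with the system. The sketch below is a minimal illustration, assuming the model returns a label with a confidence score between 0 and 1. The `Prediction` type, the 0.85 threshold, and both function names are hypothetical, and the AI Act does not mandate confidence-based routing specifically.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Hypothetical model output for one case."""
    case_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_for_review(predictions, threshold=0.85):
    """Split predictions into a routine queue and a mandatory expert-review queue."""
    routine, needs_review = [], []
    for p in predictions:
        # Cases below the (assumed) threshold must be checked by a clinician
        # before the result is used; routine cases can still be spot-checked.
        if p.confidence >= threshold:
            routine.append(p)
        else:
            needs_review.append(p)
    return routine, needs_review


def agreement_rate(ai_labels, expert_labels):
    """Fraction of reviewed cases where the expert confirmed the AI's label."""
    if len(ai_labels) != len(expert_labels):
        raise ValueError("label lists must have the same length")
    if not ai_labels:
        return 0.0
    matches = sum(a == e for a, e in zip(ai_labels, expert_labels))
    return matches / len(ai_labels)
```

A falling agreement rate over time is one possible signal that the model needs re-validation against fresh data; the threshold itself would have to be justified in the risk assessment rather than picked arbitrarily.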

What to expect from your AI development company

Although the primary responsibility lies with the provider, there are several things you should expect from your AI development partner:

- A comprehensive approach to AI development

- Knowledge of which documents can be used to describe the AI system, ideally backed by ready-made templates

- Attention to the provider's AI documentation requirements

- Efforts to minimize cybersecurity risks during development and testing.

It’s crucial to select a company with prior experience in developing AI products and a good understanding of the healthcare industry's challenges and requirements. Our video covers the phases of collaborating with a vendor to create an effective AI tool.

What's ahead for healthcare development

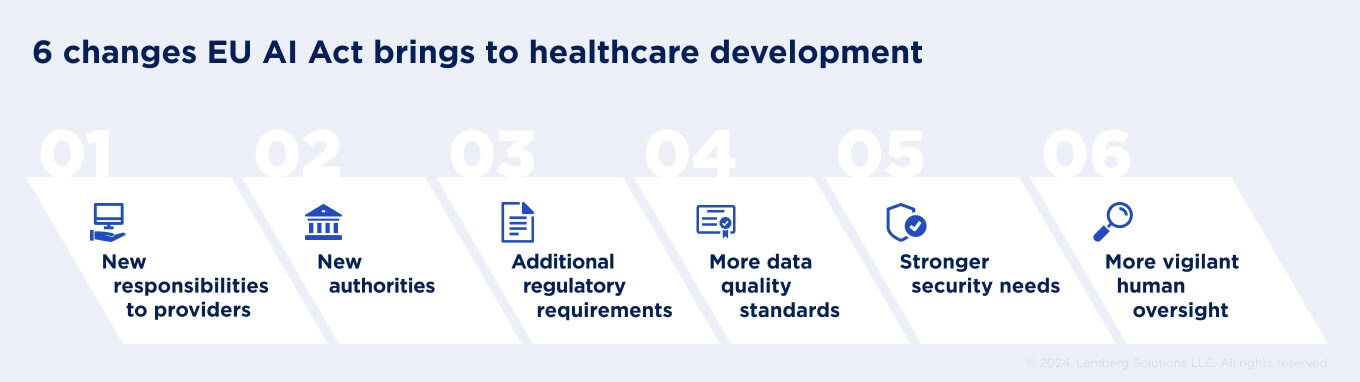

The EU AI Act may bring several changes to the healthcare industry in the coming years:

- A wide range of responsibilities for providers

- New authorities carrying out EU AI Act compliance checks

- Additional requirements on top of existing industry regulations

- Stricter data quality standards

- The need for more robust cybersecurity measures

- More vigilant human oversight.

A trusted AI development partner can make building your healthcare product easier. Lemberg Solutions has over 15 years of experience in AI development for the healthcare industry and complies with all necessary regulations.

Contact us to discuss turning your project vision into a market-ready product.